Lost in Translation between R and Python 3

Hello again!

This is the third article in our series “Lost in Translation between R and Python”. The aim of this series is to provide high-quality R and Python 3 code to achieve some non-trivial tasks. If you are to learn R, check out the R tab below. Similarly, if you are to learn Python, the Python tab will be your friend.

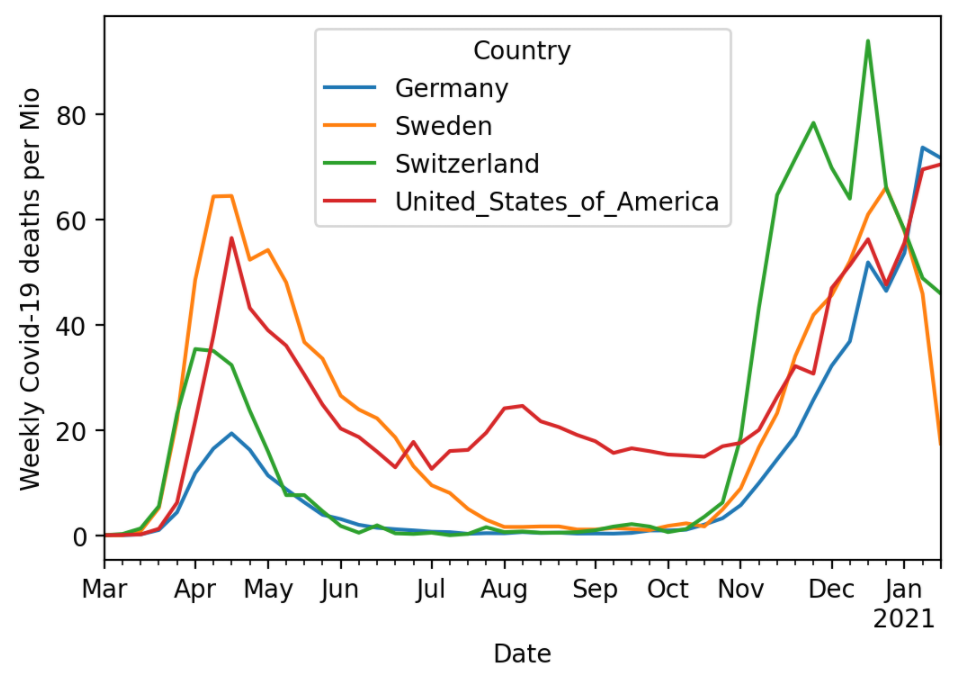

Post 2: https://lorentzen.ch/index.php/2021/01/26/covid-19-deaths-per-mio/

Before diving into the data analysis, I would like to share with you that writing this text brought back some memories from my first year as an actuary. Indeed, I started in life insurance and soon switched to non-life. While investigating mortality may be seen as a dry matter, it reveals some interesting statistical problems which have to be properly addressed before drawing conclusions.

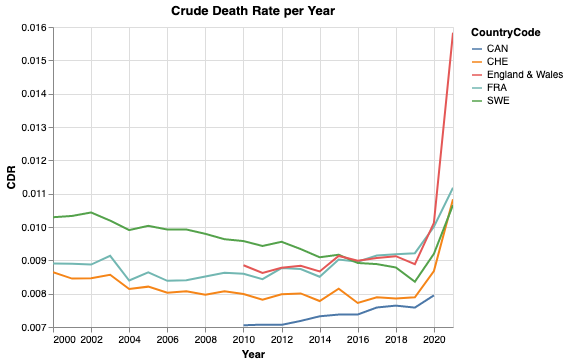

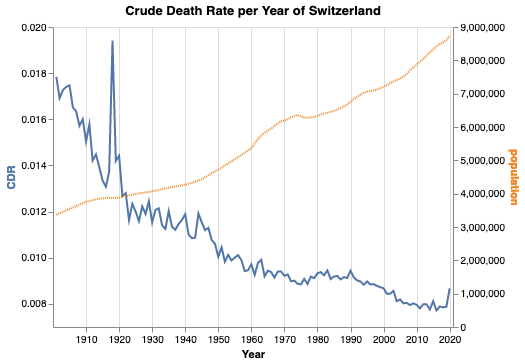

Similar to Post 2, we use a publicly available data, this time from the Human Mortality Database and from the Federal Statistical Office of Switzerland, in order to calculate crude death rates, i.e. number of deaths per person alive per year. This time, we look at a longer time periode of 20 and over 100 years and take all causes of death into account. We caution against any misinterpretation: We show only crude death rates (CDR) which do not take into account any demographic shifts like changing distributions of age or effects from measures taken against COVID-19.

Let us start with producing the first figure. We fetch the data from the internet, pick some countries of interest, focus on males and females combined only, aggregate and plot. The Python version uses the visualization library altair, which can generate interactive charts. Unfortunately, we can only show a static version in the blog post. If someone knows how to render Vega-Light charts in wordpress, I’d be very interested in a secure solution.

library(tidyverse)

library(lubridate)

# Fetch data

df_original = read_csv(

"https://www.mortality.org/Public/STMF/Outputs/stmf.csv",

skip = 2

)

# 1. Select countries of interest and only "both" sexes

# Note: Germany "DEUTNP" and "USA" have short time series

# 2. Change to ISO-3166-1 ALPHA-3 codes

# 3.Create population pro rata temporis (exposure) to ease aggregation

df_mortality <- df_original %>%

filter(CountryCode %in% c("CAN", "CHE", "FRATNP", "GBRTENW", "SWE"),

Sex == "b") %>%

mutate(CountryCode = recode(CountryCode, "FRATNP" = "FRA",

"GBRTENW" = "England & Wales"),

population = DTotal / RTotal,

Year = ymd(Year, truncated = 2))

# Data aggregation per year and country

df <- df_mortality %>%

group_by(Year, CountryCode) %>%

summarise(CDR = sum(DTotal) / sum(population),

.groups = "drop")

ggplot(df, aes(x = Year, y = CDR, color = CountryCode)) +

geom_line(size = 1) +

ylab("Crude Death Rate per Year") +

theme(legend.position = c(0.2, 0.8))import pandas as pd

import altair as alt

# Fetch data

df_mortality = pd.read_csv(

"https://www.mortality.org/Public/STMF/Outputs/stmf.csv",

skiprows=2,

)

# Select countdf_mortalityf interest and only "both" sexes

# Note: Germany "DEUTNP" and "USA" have short time series

df_mortality = df_mortality[

df_mortality["CountryCode"].isin(["CAN", "CHE", "FRATNP", "GBRTENW", "SWE"])

& (df_mortality["Sex"] == "b")

].copy()

# Change to ISO-3166-1 ALPHA-3 codes

df_mortality["CountryCode"].replace(

{"FRATNP": "FRA", "GBRTENW": "England & Wales"}, inplace=True

)

# Create population pro rata temporis (exposure) to ease aggregation

df_mortality = df_mortality.assign(

population=lambda df: df["DTotal"] / df["RTotal"]

)

# Data aggregation per year and country

df_mortality = (

df_mortality.groupby(["Year", "CountryCode"])[["population", "DTotal"]]

.sum()

.assign(CDR=lambda x: x["DTotal"] / x["population"])

# .filter(items=["CDR"]) # make df even smaller

.reset_index()

.assign(Year=lambda x: pd.to_datetime(x["Year"], format="%Y"))

)

chart = (

alt.Chart(df_mortality)

.mark_line()

.encode(

x="Year:T",

y=alt.Y("CDR:Q", scale=alt.Scale(zero=False)),

color="CountryCode:N",

)

.properties(title="Crude Death Rate per Year")

.interactive()

)

# chart.save("crude_death_rate.html")

chart

Note that the y-axis does not start at zero. Nevertheless, we see values between 0.007 and 0.014, which is twice as large. While 2020 shows a clear raise in mortality, for some countries more dramatic than for others, the values of 2021 are still preliminary. For 2021, the data is still incomplete and the yearly CDR is based on a small observation period and hence on a smaller population pro rata temporis. On top, there might be effects from seasonality. To sum up, it means that there is a larger uncertainty for 2021 than for previous whole years.

For Switzerland, it is also possible to collect data for over 100 years. As the code for data fetching and preparation becomes a bit lengthy, we won’t bother you with it. You can find it in the notebooks linked below. Note that we added the value of 2020 from the figure above. This seems legit as the CDR of both data sources agree within less than 1% relative error.

Again, note that the left y-axis does not start at zero, but the right y-axis does. One can see several interesting facts:

- The Swiss population is and always was growing for the last 120 years—with the only exception around 1976.

- The Spanish flu between 1918 and 1920 caused by far the largest peak in mortality in the last 120 years.

- The second world war is not visible in the mortality in Switzerland.

- Overall, the mortality is decreasing.

The Python notebook and R code can be found at: