🚀 Version 1.0.0 of model-diagnostics, a new Python package, was just released on PyPI. If you use models (machine learning, statistical, or otherwise) to predict a mean, median, quantile, or expectile, this library offers tools to assess the calibration of your models and to compare and decompose predictive performance scores. 🚀

pip install model-diagnostics

After finishing our paper (or better: user guide) “Model Comparison and Calibration Assessment: User Guide for Consistent Scoring Functions in Machine Learning and Actuarial Practice” last year, I realised that no Python package supported the proposed diagnostic tools (which are not completely new). Most of the required building blocks exist, but putting them together to get a result quickly amounts to a lot of code. Therefore, I decided to publish a new package.

By the way, I never really wanted to write a plotting library. But it turned out that arranging results until they are ready to be visualised makes up quite a large part of the source code. I hope it was worth the effort. Your feedback is very welcome, either here in the comments or as a feature request or bug report at https://github.com/lorentzenchr/model-diagnostics/issues.

For a jump start, I recommend going directly to the two examples:

To give a glimpse of the functionality, here are some short code snippets.

from model_diagnostics.calibration import compute_bias

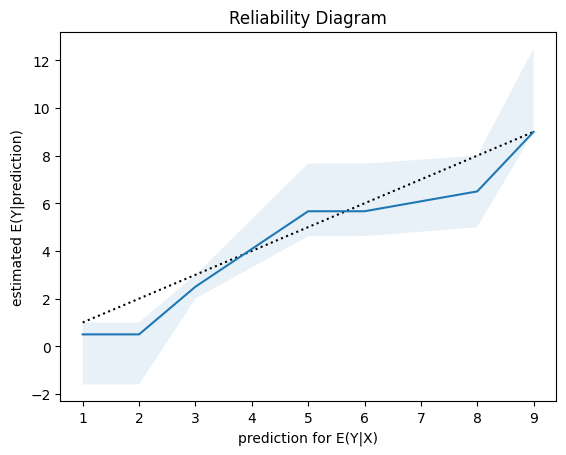

from model_diagnostics.calibration import plot_reliability_diagram

y_obs = list(range(10))

y_pred = [2, 1, 3, 3, 6, 8, 5, 5, 8, 9.]

plot_reliability_diagram(

y_obs=y_obs,

y_pred=y_pred,

n_bootstrap=1000,

confidence_level=0.9,

)

compute_bias(y_obs=y_obs, y_pred=y_pred)

| bias_mean | bias_count | bias_weights | bias_stderr | p_value |
| --- | --- | --- | --- | --- |
| f64 | u32 | f64 | f64 | f64 |
| 0.5 | 10 | 10.0 | 0.477261 | 0.322121 |
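To make the table less of a black box, the first and fourth columns can be reproduced by hand with the standard library. This is only a sketch, under the assumption that the bias for mean predictions is the average of `y_pred - y_obs` and that the standard error is the sample standard deviation divided by the square root of the sample size. The variable names (`residuals`, `t_statistic`) are purely illustrative and not part of the package.

```python
import math
import statistics

y_obs = list(range(10))
y_pred = [2, 1, 3, 3, 6, 8, 5, 5, 8, 9.0]

# Assumption: for the mean, the bias is the average of prediction minus observation.
residuals = [p - o for o, p in zip(y_obs, y_pred)]

bias_mean = statistics.mean(residuals)

# Sample standard deviation (n - 1 in the denominator) divided by sqrt(n).
bias_stderr = statistics.stdev(residuals) / math.sqrt(len(residuals))

# Test statistic for the null hypothesis "bias is zero".
t_statistic = bias_mean / bias_stderr

print(bias_mean, round(bias_stderr, 6))
```

Both values agree with `bias_mean` and `bias_stderr` above. The reported `p_value` looks consistent with a two-sided t-test based on this statistic with 9 degrees of freedom, but that part is not recomputed here.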

from model_diagnostics.scoring import SquaredError, decompose

decompose(
    y_obs=y_obs,
    y_pred=y_pred,
    scoring_function=SquaredError(),
)

| miscalibration | discrimination | uncertainty | score |
| --- | --- | --- | --- |
| f64 | f64 | f64 | f64 |
| 1.283333 | 7.233333 | 8.25 | 2.3 |
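For readers who want to see where these four numbers come from, here is a minimal pure-Python sketch. It assumes that the recalibrated predictions are obtained by isotonic regression of `y_obs` on `y_pred` (pool-adjacent-violators, with tied predictions pooled) and that the reference prediction is the overall mean of `y_obs`. The helper names `mean_squared_error` and `isotonic_recalibration` are made up for this illustration and are not the package's API.

```python
from collections import defaultdict

y_obs = list(range(10))
y_pred = [2, 1, 3, 3, 6, 8, 5, 5, 8, 9.0]


def mean_squared_error(obs, pred):
    """Average squared error between observations and predictions."""
    return sum((o - p) ** 2 for o, p in zip(obs, pred)) / len(obs)


def isotonic_recalibration(obs, pred):
    """Pool-adjacent-violators fit of obs on pred.

    Returns a dict mapping each distinct prediction to its fitted value.
    """
    groups = defaultdict(list)
    for o, p in zip(obs, pred):
        groups[p].append(o)  # tied predictions share one fitted value
    blocks = []  # each block: [sum of obs, count, covered prediction values]
    for p in sorted(groups):
        blocks.append([sum(groups[p]), len(groups[p]), [p]])
        # Merge backwards while the block means violate monotonicity.
        while (
            len(blocks) > 1
            and blocks[-2][0] / blocks[-2][1] > blocks[-1][0] / blocks[-1][1]
        ):
            s, n, ps = blocks.pop()
            blocks[-1][0] += s
            blocks[-1][1] += n
            blocks[-1][2] += ps
    return {p: s / n for s, n, ps in blocks for p in ps}


fitted = isotonic_recalibration(y_obs, y_pred)
y_recalibrated = [fitted[p] for p in y_pred]
y_marginal = [sum(y_obs) / len(y_obs)] * len(y_obs)

score = mean_squared_error(y_obs, y_pred)
score_recalibrated = mean_squared_error(y_obs, y_recalibrated)
uncertainty = mean_squared_error(y_obs, y_marginal)
miscalibration = score - score_recalibrated
discrimination = uncertainty - score_recalibrated

print(miscalibration, discrimination, uncertainty, score)
```

Under these assumptions the sketch reproduces the table: miscalibration is what recalibration would gain, discrimination is what the recalibrated model gains over the constant mean prediction, and uncertainty is the score of that constant prediction.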

This score decomposition is additive (and unique):

\begin{equation*}
\mathrm{score} = \mathrm{miscalibration} - \mathrm{discrimination} + \mathrm{uncertainty}
\end{equation*}

As usual, the code snippets are collected in a notebook: https://github.com/lorentzenchr/notebooks/blob/master/blogposts/2023-07-16%20model-diagnostics.ipynb.
